UA Little Rock researchers studying development of benchmarks to test machine learning

Researchers from the University of Arkansas at Little Rock are joining technology companies and universities such as Google, Intel, Stanford University, and Harvard University to create the next-generation benchmark suite for machine learning.

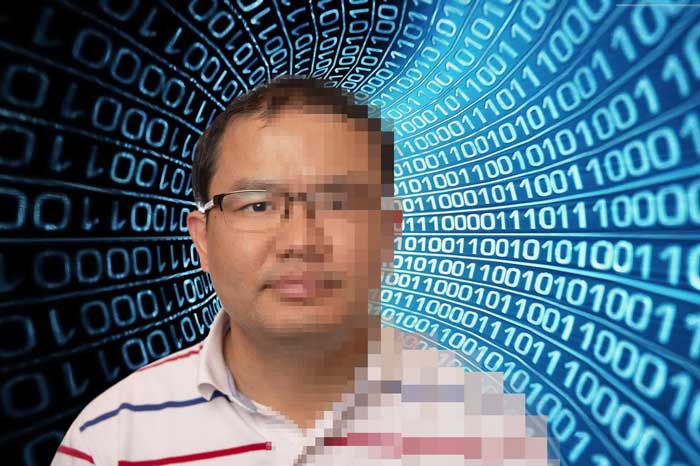

Wei “David” Dai, a doctoral candidate in information science, is pursuing dissertation research on evaluating machine learning with imperfect data. Machine learning is a field of computer science that uses statistical techniques to give computer systems the ability to learn from data.

In most experiments, machine learning is tested using perfect data. In this project, Dai and his dissertation advisor, Dr. Daniel Berleant, professor of information science, are deliberately corrupting image files so that they appear grainier and more pixelated.
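The article does not describe the exact corruption procedure Dai and Berleant use. As a rough illustration only, the sketch below assumes a grayscale image stored as rows of intensities from 0 to 255 and applies two common kinds of corruption: Gaussian noise to make the image grainy, and block averaging to make it pixelated. The function names `add_noise` and `pixelate` and their parameters are hypothetical.

```python
import random


def add_noise(image, sigma, seed=0):
    """Return a grainier copy of a grayscale image by adding Gaussian noise.

    `image` is a list of rows of 0-255 intensities (an assumed format);
    noisy values are rounded and clipped back into the 0-255 range.
    """
    rng = random.Random(seed)  # seeded so the same corruption can be reproduced
    return [
        [min(255, max(0, round(p + rng.gauss(0, sigma)))) for p in row]
        for row in image
    ]


def pixelate(image, block):
    """Return a pixelated copy: each block x block tile becomes its mean value."""
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for top in range(0, height, block):
        for left in range(0, width, block):
            # Tiles at the right and bottom edges may be smaller than block x block.
            rows = range(top, min(top + block, height))
            cols = range(left, min(left + block, width))
            tile = [image[r][c] for r in rows for c in cols]
            mean = round(sum(tile) / len(tile))
            for r in rows:
                for c in cols:
                    out[r][c] = mean
    return out


if __name__ == "__main__":
    # A small synthetic 8x8 gradient stands in for a real photo.
    clean = [[(r * 16 + c * 16) % 256 for c in range(8)] for r in range(8)]
    corrupted = pixelate(add_noise(clean, sigma=20), block=4)
    for row in corrupted:
        print(row)
```

Fixing the random seed keeps a corrupted image identical from run to run, which would matter if such images are to serve as a shared benchmark: a model's accuracy on the clean and corrupted versions could then be compared directly.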

“How can we evaluate machine learning when data quality has problems? In the real world, imperfect datasets are the majority,” Berleant explained.

Thanks to funding from the Donaghey College of Engineering and Information Technology, Dai traveled to Stanford University on July 18 to attend a technical meeting of MLPerf researchers, where he discussed his proposal to develop an imperfect-dataset benchmark for testing machine learning systems.

MLPerf is a collaboration among a diverse group of universities and companies, including Google, Intel, Harvard, and Stanford. The group has released a benchmarking tool, also called MLPerf, that measures the speed of machine learning software and hardware.

The MLPerf effort aims to build a common set of benchmarks that will eventually let the machine learning field measure system performance for both training and inference, on platforms ranging from mobile devices to cloud services. A widely accepted benchmark suite will benefit researchers, developers, builders of machine learning frameworks, cloud service providers, hardware manufacturers, application providers, and end users.

“The important thing is that we want to change from the use of perfect data to imperfect datasets instead,” Dai said.

For his dissertation research, Dai plans to create imperfect datasets, test how well algorithms perform on them, and develop solutions where the algorithms fall short. The ultimate goal is a mutated dataset that researchers can use as a benchmark in other areas, such as self-driving vehicles, computer vision, and face recognition.

Dai earned a master’s degree in information science from UA Little Rock in 2016. Before moving to the U.S., he worked as a senior data scientist engineer at IBM China and IBM China Lab for seven years.

Dai has published academic papers and books in the U.S. and China. In the U.S., he has published four conference papers, with a fifth currently under review. He has also received three awards from the UA Little Rock Student Research and Creative Works Expo in 2016 and 2017, as well as a research award from the Donaghey College of Engineering and Information Technology in 2017. In China, he published two database books and five technical papers, released three patents, and twice won the Excellent Employee Award and Outstanding Instructor Award from IBM.

In the photo at upper right, Dai has instructed a computer to corrupt part of an image of his face as part of his machine learning research. Photo by Ben Krain.